[What is the problem and why does it matter?] AI models are increasingly embedded in decision-making systems, yet most are designed and evaluated in isolation from how they will be deployed, governed, and used in practice. Especially in high-stakes domains such as healthcare and sustainability, context, including operational and regulatory constraints, shapes trust, adoption, and impact. This gap limits how much value organizations can reliably extract from AI.

[What is trustworthy AI?] Trustworthy AI refers to AI systems that meet core principles such as accountability, transparency, fairness, safety and robustness, privacy, human oversight, and societal and environmental well-being. These principles define what it means for AI systems to be deployable and usable in practice, and they are increasingly formalized through regulatory and policy frameworks such as the EU AI Act and the US Blueprint for an AI Bill of Rights.

[What is my approach?] I view trustworthiness as both a modeling and a systems problem. From a modeling viewpoint, I build models that are trustworthy by design, with properties such as interpretability or stability chosen to match the needs of the application. From a systems viewpoint, I study how deployment and governance decisions shape downstream behavior and outcomes. Methodologically, I use tools from optimization, operations research, and machine learning to develop theory and algorithms, and I study their performance once they are embedded in real operational processes, including healthcare delivery and sustainability planning.

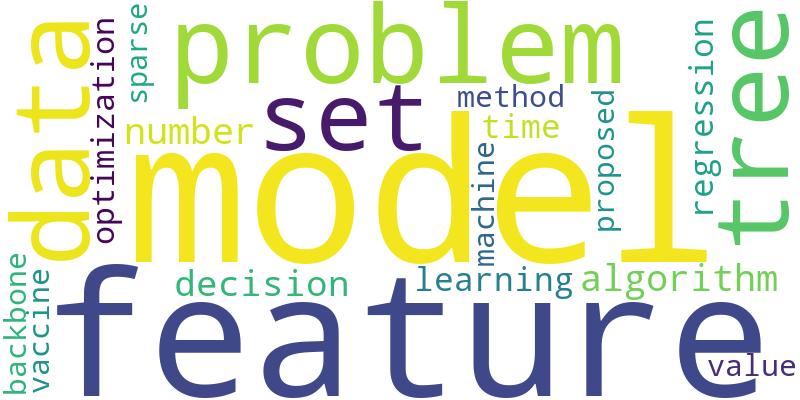

Figure: The 20 most common keywords in my first 10 papers.